Science

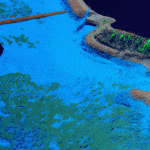

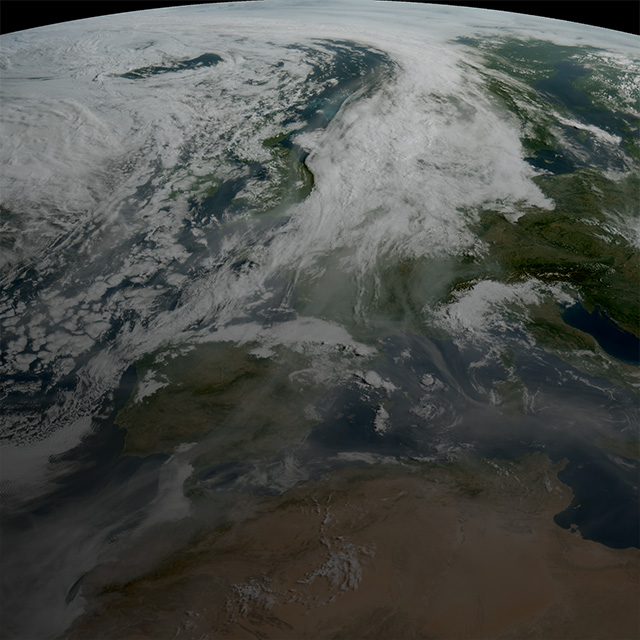

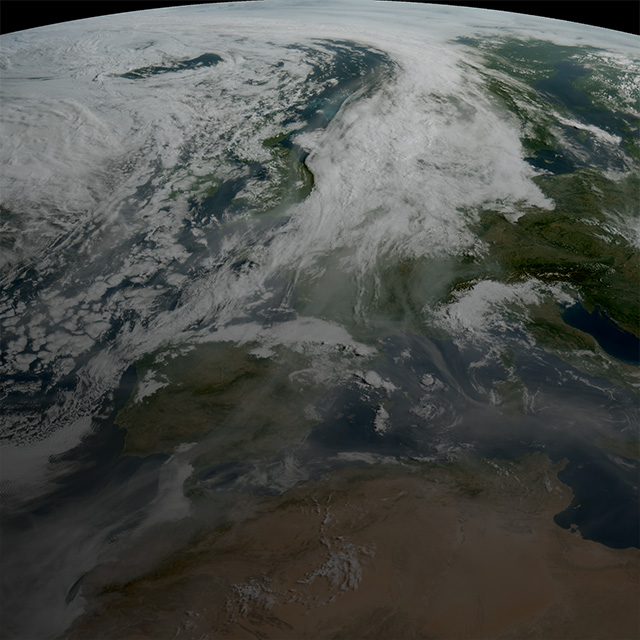

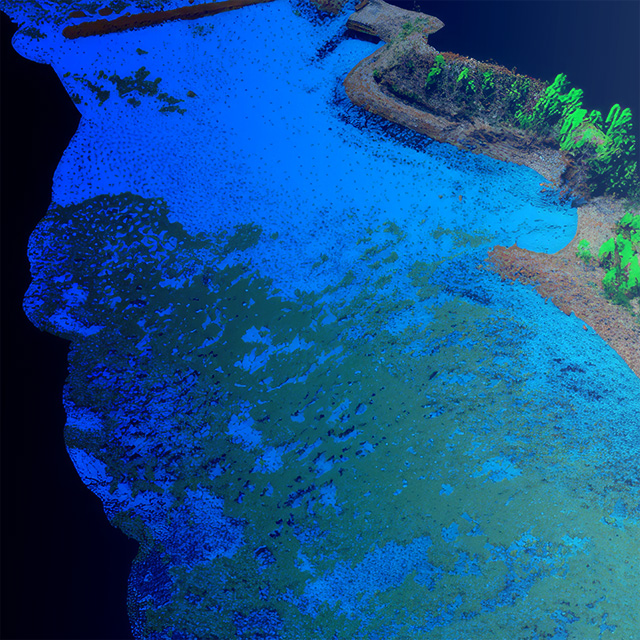

The Highest Fidelity Weather Data In History: The 3rd-Gen Meteosat Is Live

Possibility is a Hub for stories about technology, science and people shaping digital imaging. Learn more.

Don't miss out on new Possibility Hub stories & other useful information from Teledyne Imaging.

There are two ways to subscribe: